libmdbx (2021-01-29 ... 2021-06-08)

Previous messages

Next messages

29 January 2021

AV

16:57

Artem Vorotnikov

во время их выполнения мой рантайм растаскивает логические треды по системным как ему вздумается - при этом код в корутине выполняется последовательно, строчка за строчкой

Л(

16:57

Леонид Юрьев (Leonid Yuriev)

@vorot93, если вам необходимо работать с БД только из одного "растового" процесса, то можно сделать так:

- открывать БД в эксклюзивном режиме, т.е. другие процессы не смогут с ней работать.

- добавить в libmdbx дополнительную функцию открытия БД с предоставлением callback-ов для замены mutex-ов.

- открывать БД в эксклюзивном режиме, т.е. другие процессы не смогут с ней работать.

- добавить в libmdbx дополнительную функцию открытия БД с предоставлением callback-ов для замены mutex-ов.

17:05

In reply to this message

Во всех приличных фрейворках есть возможность на время монополизировать использование треда (иначе просто беда с любым внешним кодом).

Это _должно_ быть в rust, тогда достаточно "пиннить" текущий тред перед стартом пишущей транзакции и "отпиннивать" после завершения.

Технически это может выглядеть и называть по-разному. Например, изъятие треда из пула и возврат назад, и т.п.

Это _должно_ быть в rust, тогда достаточно "пиннить" текущий тред перед стартом пишущей транзакции и "отпиннивать" после завершения.

Технически это может выглядеть и называть по-разному. Например, изъятие треда из пула и возврат назад, и т.п.

AV

17:23

Artem Vorotnikov

In reply to this message

В расте (а точне, во фреймворке Tokio, который де факто стандарт) можно или запустить корутину на общем многопоточном экзекьюторе (только если она

https://doc.rust-lang.org/nomicon/send-and-sync.html

Send, то бишь все структуры внутри неё можно двигать между потоками), или запустить прибитой к треду от начала и до конца (но можно даже если `!Send`). Динамически прикрепить и открепить нельзя, потому что это риск UB из безопасного кода.https://doc.rust-lang.org/nomicon/send-and-sync.html

Л(

17:27

Леонид Юрьев (Leonid Yuriev)

In reply to this message

Тогда два варианта:

1. Запускать работающие с _пишущими_ транзакциями короутины на отдельном треде.

В целом этом 100% логично, так как пишущие транзакции и операции внутри них строго сериализуются (т.е. строго последовательны).

2. Костылить:

- открывать БД в эксклюзивном режиме, т.е. другие процессы не смогут с ней работать.

- добавить в libmdbx дополнительную функцию открытия БД с предоставлением callback-ов для замены mutex-ов

1. Запускать работающие с _пишущими_ транзакциями короутины на отдельном треде.

В целом этом 100% логично, так как пишущие транзакции и операции внутри них строго сериализуются (т.е. строго последовательны).

2. Костылить:

- открывать БД в эксклюзивном режиме, т.е. другие процессы не смогут с ней работать.

- добавить в libmdbx дополнительную функцию открытия БД с предоставлением callback-ов для замены mutex-ов

NK

17:42

Noel Kuntze

Please add a way to build the original source code without it being in a git repo, so one can use the zip and tar gz archive links of github and still use the tests

17:43

I'd rather not fiddle around with the source again and make all the metadata the Makefile pulls from the git repo settable via the args

AV

17:44

Artem Vorotnikov

In reply to this message

хм, т.е. вариант открыть в эксклюзивном режиме и вместо мутекса подставить пустышку?

Л(

18:03

Леонид Юрьев (Leonid Yuriev)

In reply to this message

Please fill an issue at github if you needed this.

However, so far I don't see any sense in this, because:

- if necessary, you can independently package the entire git repo in tarball.

- git allows you to limit the depth when cloning to reduce overhead.

Nonetheless, I will accept a PR if you implement (for instance) such make-target.

However, so far I don't see any sense in this, because:

- if necessary, you can independently package the entire git repo in tarball.

- git allows you to limit the depth when cloning to reduce overhead.

Nonetheless, I will accept a PR if you implement (for instance) such make-target.

NK

18:03

Noel Kuntze

It's for building a distribution package

18:04

And for obvious reasons there shouldn't be a need to make an extra tarball of the sources to provide to the build mechanism if the source is already available as a tar ball, although without the git archive

18:05

If a package is built from a git repo, it has to have the suffix -git in it and that implies it's unstable

Л(

18:05

Леонид Юрьев (Leonid Yuriev)

In reply to this message

Ну не пустышку, а методы объекта, который вы будете использовать для серилизации/упорядочевания пишущих транзакцию внутри rust-процесса.

NK

18:06

Noel Kuntze

(because the grand majority of the packages that are sourced from git directly, without a tarball, generate specific package versions depending on the current git head in order to version the package)

18:06

(Because otherwise there's no proper versioning between two builts from the same repo at different times and with different contents, but the same build rules/scripts)

18:07

It's been common practice to build from git repos by fetching the tar balls at certain versions and checking their checksum

18:07

the tar ball then doesn't contain the .git folder

18:08

So commonly, the git history of a piece of software is not availabe at build time (because it's not needed, because there's no special versioning required when the release itself is already properly named. E.g. v0.9.2)

Л(

18:08

Леонид Юрьев (Leonid Yuriev)

In reply to this message

Ok. Please file the issue with rationale as you described here.

I'll think about doing it when I have the time.

I'll think about doing it when I have the time.

NK

18:09

Noel Kuntze

At least the Alpine devs will (probably) refuse to accept the package if I just point it at the head or a tagged release because it's a git repo and we can't easily checksum that, AFAIK

18:09

(Reason being the existing automated code for verifying the checksum likely doesn't have the special case of checking a whole git repo)

NK

19:45

Noel Kuntze

When building from source, libmdbx just got a runtime dependency on libgcc_s.so. Do you know from the top of your head if that's normal or if I just forgot to turn of debug building?

19:45

I passed -DNODEBUG=1 via CFLAGS just in case

Л(

19:54

Леонид Юрьев (Leonid Yuriev)

In reply to this message

Nope.

This only depends on the compiler (i.e. GCC) and how it is configured to and implements support the target platform.

For instance, the

In addition, if the library was built with support for a new interface for C++, then there will be a dependency on the C++ runtime, which in turn may have a dependency on

This only depends on the compiler (i.e. GCC) and how it is configured to and implements support the target platform.

For instance, the

libgcc_s.so may contain long division functions, support for C11 atomics, etc...In addition, if the library was built with support for a new interface for C++, then there will be a dependency on the C++ runtime, which in turn may have a dependency on

libgcc_s.so.

30 January 2021

AS

06:03

Alex Sharov

In reply to this message

Вообще это одна из полезных фич: не останавливая процесса, поковыряться в его базе снаружи, позапускать аналитику, погрепать, ...

06:05

Концептуально не имеет большого смысла делать пишущие транзакции на work-stealing пуле, потому что: пишущая транзакция всегда только одна.

06:08

Подумай - может имеет смысл прямо в типах реализовать тот факт что пишущая транзакция всегда только одна, а не претворяться что пишущие транзакции не отличаются от читающих.

06:22

Это наложит на приложение определенные good practices ограничения - возможно это хорошо

06:31

Еще: под нагрузкой - количество читающих транзакций будет ограниченно

mdbx_env_set_maxreaders. Не знаю проблема это или нет.

2 February 2021

Л(

02:32

Леонид Юрьев (Leonid Yuriev)

В ветке

Заинтересованным предлагается поучаствовать в тестировании = для этого достаточно запустить сценарий

Пару слов об этом тестовом сценарии:

- "из каробки" поддерживаются Darwin (MacOS/iOS), FreeBSD и Linux, а для прочих ОС требуется правки скрипта по-месту (для Windows рекомендуется создание RAMDISK).

- сценарий не предполагает фиксированного времени выполнения (потенциально может работать несколько лет), но де-факто завершится либо из-за нехватки ОЗУ, либо достижения лимитов установленных по-умолчанию (размер списка грязных страниц и т.п.).

- предлагается запустить скрипт на 1-N суток и предоставить логи когда он завершиться из-за какой-то ошибки.

devel на github завершен ряд доработок, см. https://github.com/erthink/libmdbx/blob/devel/ChangeLog.mdЗаинтересованным предлагается поучаствовать в тестировании = для этого достаточно запустить сценарий

./test/long_stochastic.sh находясь в корне git repo.Пару слов об этом тестовом сценарии:

- "из каробки" поддерживаются Darwin (MacOS/iOS), FreeBSD и Linux, а для прочих ОС требуется правки скрипта по-месту (для Windows рекомендуется создание RAMDISK).

- сценарий не предполагает фиксированного времени выполнения (потенциально может работать несколько лет), но де-факто завершится либо из-за нехватки ОЗУ, либо достижения лимитов установленных по-умолчанию (размер списка грязных страниц и т.п.).

- предлагается запустить скрипт на 1-N суток и предоставить логи когда он завершиться из-за какой-то ошибки.

Л(

20:39

Леонид Юрьев (Leonid Yuriev)

Would you like get the next release now or wait for a more features?

Anonymous poll

- Release now 3 votes, chosen vote

- Wait for a features from the TODO list

3 votes

NK

21:06

Noel Kuntze

Release often please. That way distros don't need to make and apply patches in between, but can just point the source URL at the newest tarball

21:06

It saves 15 to 60 minutes per release

21:06

(because you need to check if the package afterwards still builds fine manually

21:06

)

21:07

(Also, it looks much cleaner)

21:08

It's also better for new users/developers, because the newest release will include the most bugfixes. Otherwise they first need to go over the issue tracker or the devel branch to check for patches that aren't in the newest release yet

Л(

NK

23:02

Noel Kuntze

Hi, I started work on writing Python 3 bindings for libmdbx, but the mdbx.h++ file is more than 4500 lines in size. Can I just skip all the helper functions you defined together with their bodies, or are they necessary for the functioning of libmdbx?

23:03

Also, why *are* they defined in the header file, instead of just being declared?

4 February 2021

NK

01:33

Noel Kuntze

Nvm, I figured it out. You can't inline them otherwise. :P

01:39

@erthink I implemented setting the MDBX_ vars during compile time from the cmdline: https://gitlab.alpinelinux.org/alpine/aports/-/blob/c0979bac716b859b4649e7cc685f4ec1816d0d6e/testing/libmdbx/0001-Make-MDBX_-vars-specifyable-via-args.patch

Л(

16:05

Леонид Юрьев (Leonid Yuriev)

In reply to this message

It's good that you came to this conclusion yourself.

There is no perfect solution here:

- Almost all the functions there do not make sense to take out in the DSO, since the cost of preparing arguments in the stack is commensurate with the body of the functions when ones inlined.

- In addition, the visibility of the function body complements the documentation - so it is easier to understand it and how it works.

- Unfortunately, all this increases the size of the header file. But still, I prefer to supply a single header file and recommend using convenient IDEs to work with the code.

There is no perfect solution here:

- Almost all the functions there do not make sense to take out in the DSO, since the cost of preparing arguments in the stack is commensurate with the body of the functions when ones inlined.

- In addition, the visibility of the function body complements the documentation - so it is easier to understand it and how it works.

- Unfortunately, all this increases the size of the header file. But still, I prefer to supply a single header file and recommend using convenient IDEs to work with the code.

16:11

In reply to this message

This easy solution, but it is wrong (

All the hassle with getting information from the

Your solution certainly works, but it makes it easy to make an error by simply forgetting to update the value passed in the arguments.

All the hassle with getting information from the

git is necessary to avoid human error when generating version information.Your solution certainly works, but it makes it easy to make an error by simply forgetting to update the value passed in the arguments.

16:13

@thermi, as I wrote before, please submit the issue at github with the reasoning you provided here earlier.

9 February 2021

AS

07:24

Alex Sharov

what is right way to get GC size in runtime -

mdbx_dbi_stat(FREE_DBI)?

Л(

07:27

Леонид Юрьев (Leonid Yuriev)

In reply to this message

This way you can get the number of entries in the GC/Freelist and the space ones occupied, but not the number of pages placed in the GC/Freelist.

AS

07:31

Alex Sharov

In reply to this message

yes, I looking for size of GC DBI itself, not amount of page ids holded inside. thank

Л(

07:56

Леонид Юрьев (Leonid Yuriev)

На всякий, не забывайте, что обновление GC происходит только при коммите, т.е. если текущая транзакция много помешает или берет из GC, то изнутри её вы в GC этого не увидите.

AS

07:59

Alex Sharov

да, печатаю размеры DBI и GC сразу после коммита, а всякую дебажную статистику TxInfo (

space_dirty, space_retired) сразу перед коммитом.

Л(

08:02

Леонид Юрьев (Leonid Yuriev)

Ещё в space_dirty сейчас есть забытый баг - величина считается по кол-во грязных страниц, без учёта что некоторые могут быть overflow/large.

AS

08:03

Alex Sharov

у нас осталось не много overflow страниц, будем считать что это не страшно.

10 February 2021

basiliscos invited basiliscos

Л(

20:15

Леонид Юрьев (Leonid Yuriev)

@AskAlexSharov, могли бы уточнить зачем вам были нужны вложенные транзакции и почему теперь их вычищаете?

11 February 2021

AS

05:19

Alex Sharov

In reply to this message

Мы искали где-бы их применить. Не нашли потому что в lmdb вложенные транзакции не бездимитны. Ну и вообще похоже что нет юзкейсов у нас для них.

AS

06:11

Alex Sharov

Пред-предыдущая версия софта работала на leveldb - совсем без транзакций.

AS

06:56

Alex Sharov

в биндинги я их верну сегодня. просто автор не знал что это app-agnostic биндинги

Л(

07:13

Леонид Юрьев (Leonid Yuriev)

👍

Л(

07:45

Леонид Юрьев (Leonid Yuriev)

Вложенные транзакции нужны только если логика работы приложения предполагает их отмену в каких-то ситуациях.

AS

Л(

07:59

Леонид Юрьев (Leonid Yuriev)

👍

AS

Ruslan invited Ruslan

R

23:30

Ruslan

Привет, подскажите пожалуйста как правильно на lmdbx/lmdb реализовывать LRU кеш? Сейчас реализовал простую очередь, но она все равно не работает: задал mdb_env_set_mapsize равный ~300mb, кидаю значения с размерами от 7 до 15 mb, через код контролирую заполнение базы не более чем на 80% (удаляю то что выпадает из очереди). В итоге получаю "MDBX_MAP_FULL: Environment mapsize limit reached", через MDBX_stat вижу, что база занимает 212353024 байт, а лимит 300003328 байт. Что я делаю не так? Минимальный пример - https://pastebin.com/2NdKy5aj

Л(

23:40

Леонид Юрьев (Leonid Yuriev)

In reply to this message

TL;DR;

У вас там (видимо, скорее всего) где-то текут (не закрываются) читающие транзакции, либо данные не удаляются (транзакции абортятся).

Советую запустить под отладчиком и между транзакциями посмотреть:

- заполненность БД посредством

- активных читателей посредством

У вас там (видимо, скорее всего) где-то текут (не закрываются) читающие транзакции, либо данные не удаляются (транзакции абортятся).

Советую запустить под отладчиком и между транзакциями посмотреть:

- заполненность БД посредством

mdbx_chk и/или mdbx_stat;- активных читателей посредством

mdbx_stat -r.

23:42

Еще хотелось-бы чтобы вы попробовали C++ API.

R

23:43

Ruslan

In reply to this message

Да, сейчас проверю замечания, спасибо. Код наколеночный, извиняюсь

Л(

23:52

Леонид Юрьев (Leonid Yuriev)

И на всякий случай, для понимания:

Большие/длинные значения в MDBX/LMDB хранятся в "overflow pages" (устоявшийся термин), которые физически является последовательностями смежных страниц.

Т.е. для размещения 1 mb данных нужно 1024/4 = 256 свободных страниц БД, расположенных последовательно.

Поэтому:

- места в БД может не хватать из-за фрагментации.

- лучше при создании БД задать максимальный размер страницы (64K).

Большие/длинные значения в MDBX/LMDB хранятся в "overflow pages" (устоявшийся термин), которые физически является последовательностями смежных страниц.

Т.е. для размещения 1 mb данных нужно 1024/4 = 256 свободных страниц БД, расположенных последовательно.

Поэтому:

- места в БД может не хватать из-за фрагментации.

- лучше при создании БД задать максимальный размер страницы (64K).

R

23:52

Ruslan

In reply to this message

Получается как то так https://pastebin.com/wqUw1166 , в целом по коду транзакции течь не должны, читатели всегда абортятся, а писатели закрываются через коммит, но если не было коммита, тогда они заабортятся.

23:54

In reply to this message

Как раз на фрагментацию и грешим, можно ли на рантайме как то уменьшить фрагментацию или быть уверенным заранее, что буфер размером 10мб сможет уместиться в базу? Копировать базу не вариант

Л(

23:55

Леонид Юрьев (Leonid Yuriev)

In reply to this message

Если в

retained нули, то значит читатели не мешают переработки мусора.

R

12 February 2021

Л(

00:02

Леонид Юрьев (Leonid Yuriev)

In reply to this message

1.

При попытке помещения очередного значения будет либо SUCCESS, либо ошибка из-за отсутствия места.

2.

Сейчас это приведет к пометке транзакции как ошибочной, но можно сделать правку чтобы просто возвращалась ошибка.

Либо добавить в API функцию проверки наличия места и/или поиска максимального span of free pages.

3.

Исторический LMDB/MDBX позволяют хранить большие значения, но умеют это только в последовательных страницах (линейных кусках БД).

В libmdbx это поведение изменено не будет, а в MithrilDB появиться опция = можно будет использовать потоковое API для записи/чтения BLOB-ов.

Соответственно, проблему нехватки места из-за фрагментации можно будет обойти.

При попытке помещения очередного значения будет либо SUCCESS, либо ошибка из-за отсутствия места.

2.

Сейчас это приведет к пометке транзакции как ошибочной, но можно сделать правку чтобы просто возвращалась ошибка.

Либо добавить в API функцию проверки наличия места и/или поиска максимального span of free pages.

3.

Исторический LMDB/MDBX позволяют хранить большие значения, но умеют это только в последовательных страницах (линейных кусках БД).

В libmdbx это поведение изменено не будет, а в MithrilDB появиться опция = можно будет использовать потоковое API для записи/чтения BLOB-ов.

Соответственно, проблему нехватки места из-за фрагментации можно будет обойти.

R

Л(

00:08

Леонид Юрьев (Leonid Yuriev)

In reply to this message

К пункту 2 выше можно добавить еще один workaround - использовать вложенные транзакции, тогда при ошибке поломается вложенная транзакция, а родительская останется целой.

R

00:23

Ruslan

In reply to this message

А в каком порядке выделяются станицы для данных? В моем примере я вообще то последовательно удаляю наиболее старые данные используется простую очередь fifo, то есть при определенных настройках думаю можно было бы избежать фрагментации

Л(

00:34

Леонид Юрьев (Leonid Yuriev)

In reply to this message

Если не уходить в детальное объяснение, то в "обычном" порядке, но не трогая MVCC-снапшоты используемые читателями.

Если у вас много асинхронно работающих читателей, то они могут блокировать переработку мусора.

Чтобы поймать такую ситуацию используйте

Если у вас много асинхронно работающих читателей, то они могут блокировать переработку мусора.

Чтобы поймать такую ситуацию используйте

mdbx_env_set_hsr(), см https://erthink.github.io/libmdbx/group__c__err.html#gaedc09dd7e0634163be8aafdf00d7db77.MDBX_COALESCE в вашем случае особо не влияет, поскольку при поиске места движок будет читать и объединять записи из GC пока не найдет последовательности достаточной длинны, т.е. MDBX_COALESCE как-бы включается вынужденно.

00:36

In reply to this message

Еще, при большом размере БД (большом кол-ве страниц), возможно, нужно увеличить лимит

MDBX_opt_rp_augment_limit, см https://erthink.github.io/libmdbx/group__c__api.html#gga671855605968c3b1f89a72e2d7b71eb3a9fe64bcad43752eac6596cbb49a2be2d.

R

00:51

Ruslan

In reply to this message

В том примере кода есть только читатели, которые высчитвают размер бд через mdbx_dbi_stat и тут же абортятся. Читатели с писателями в примере при этом не пересакаются. Можно убрать читателей и высчитвать размер самим, но это тоже не помогает

Л(

01:27

In reply to this message

Чуть поправил ваш код, чтобы для скорости БД была в

Запустил под отладчиком, поставит точку остановки на #226: throw std::runtime_error(std::string("exception :") + mdbx_strerror(res));

Поймал

mem: 218796032/300003328 bytes, inserted = 905, shrinked = 888, real_used_space = 218684634, used_keys = 17

mem: 227213312/300003328 bytes, inserted = 929, shrinked = 906, real_used_space = 227109072, used_keys = 23

mem: 223158272/300003328 bytes, inserted = 952, shrinked = 932, real_used_space = 223030154, used_keys = 20

При этом

Не останавливая отладку запустил

Processing '@GC'...

- key-value kind: ordinal-key => single-value, flags: integerkey (0x08), dbi-id 0

- page size 4096, entries 16

- b-tree depth 1, pages: branch 0, leaf 1, overflow 20

- fixed key-size 8

transaction 2029, 918 pages, maxspan 907

transaction 2030, 926 pages, maxspan 926

transaction 2031, 1018 pages, maxspan 1018

transaction 2032, 1018 pages, maxspan 682

transaction 2033, 1018 pages, maxspan 1018

transaction 2034, 1018 pages, maxspan 1018

transaction 2035, 1018 pages, maxspan 1018

transaction 2036, 1018 pages, maxspan 749

transaction 2037, 1018 pages, maxspan 1018

transaction 2038, 1018 pages, maxspan 1018

transaction 2039, 1018 pages, maxspan 556

transaction 2040, 1017 pages, maxspan 726

transaction 2041, 18 pages, maxspan 6

transaction 2042, 3411 pages, maxspan 3407

transaction 2043, 20 pages, maxspan 4

transaction 2044, 3411 pages, maxspan 3407

- summary: 16 records, 0 dups, 128 key's bytes, 75596 data's bytes, 0 problems

- space: 73243 total pages, backed 73243 (100.0%), allocated 72285 (98.7%), remained 958 (1.3%), used 53402 (72.9%), gc 18883 (25.8%), detained 3411 (4.7%), reclaimable 15472 (21.1%), available 164

Другими словами, вполне очевидно в GC нет последовательности из 3699 свободных страниц, т.е. причина в фрагментации.

/dev/shm/.Запустил под отладчиком, поставит точку остановки на #226: throw std::runtime_error(std::string("exception :") + mdbx_strerror(res));

Поймал

MDBX_MAP_FULL = -30792 с хвостом в консоли:mem: 218796032/300003328 bytes, inserted = 905, shrinked = 888, real_used_space = 218684634, used_keys = 17

mem: 227213312/300003328 bytes, inserted = 929, shrinked = 906, real_used_space = 227109072, used_keys = 23

mem: 223158272/300003328 bytes, inserted = 952, shrinked = 932, real_used_space = 223030154, used_keys = 20

При этом

db_value.iov_len = 15149103, т.е. (15149103 + 4096 - 1) / 4096 = 3699 страниц.Не останавливая отладку запустил

./mdbx_chk -cvvvv /dev/shm/, среди прочего вижу:Processing '@GC'...

- key-value kind: ordinal-key => single-value, flags: integerkey (0x08), dbi-id 0

- page size 4096, entries 16

- b-tree depth 1, pages: branch 0, leaf 1, overflow 20

- fixed key-size 8

transaction 2029, 918 pages, maxspan 907

transaction 2030, 926 pages, maxspan 926

transaction 2031, 1018 pages, maxspan 1018

transaction 2032, 1018 pages, maxspan 682

transaction 2033, 1018 pages, maxspan 1018

transaction 2034, 1018 pages, maxspan 1018

transaction 2035, 1018 pages, maxspan 1018

transaction 2036, 1018 pages, maxspan 749

transaction 2037, 1018 pages, maxspan 1018

transaction 2038, 1018 pages, maxspan 1018

transaction 2039, 1018 pages, maxspan 556

transaction 2040, 1017 pages, maxspan 726

transaction 2041, 18 pages, maxspan 6

transaction 2042, 3411 pages, maxspan 3407

transaction 2043, 20 pages, maxspan 4

transaction 2044, 3411 pages, maxspan 3407

- summary: 16 records, 0 dups, 128 key's bytes, 75596 data's bytes, 0 problems

- space: 73243 total pages, backed 73243 (100.0%), allocated 72285 (98.7%), remained 958 (1.3%), used 53402 (72.9%), gc 18883 (25.8%), detained 3411 (4.7%), reclaimable 15472 (21.1%), available 164

Другими словами, вполне очевидно в GC нет последовательности из 3699 свободных страниц, т.е. причина в фрагментации.

R

Л(

01:41

Леонид Юрьев (Leonid Yuriev)

In reply to this message

В качестве эксперимента сделал

Но только с предварительным удалением БД и до открытия, когда можно задать размер страницы.

Т.е. динамический размер (с увеличением, но без уменьшения) от минимального до вдвое большего и с максимальным размером страницы.

Результат:

- уже 100К итераций.

- размер БД 320192 Кб.

+ средний размер использованного места около 222 Мб.

mdbx_env_set_geometry(env, -1, 0, cache_size * 2, -1, 0, 65536) вместо mdbx_env_set_mapsize().Но только с предварительным удалением БД и до открытия, когда можно задать размер страницы.

Т.е. динамический размер (с увеличением, но без уменьшения) от минимального до вдвое большего и с максимальным размером страницы.

Результат:

- уже 100К итераций.

- размер БД 320192 Кб.

+ средний размер использованного места около 222 Мб.

R

Л(

02:06

Леонид Юрьев (Leonid Yuriev)

In reply to this message

На всякий для FIFO (когда вставка всегда идёт в порядке возрастания ключей) лучше добавлять данные с опцией

MDBX_APPEND.

Л(

13 February 2021

AS

13:27

Alex Sharov

hypotetical question: is it possible to implement drop_prefix (or drop_range) method - which will not touch leaf pages (or touch only first/last leaf pages only)? For example by visiting only branch nodes and adding id of all leaf nodes to GC.

also - we already have cursor_delete(

reason why i'm asking: we some fear like "deleting large range may take unpredictable time - because we need to read all data in range" - I wounder - how real is our fear.

also - we already have cursor_delete(

MDBX_NODUPDATA) - to drop sub-db - how it works now? (I don't understand from source code).reason why i'm asking: we some fear like "deleting large range may take unpredictable time - because we need to read all data in range" - I wounder - how real is our fear.

Л(

15:03

Леонид Юрьев (Leonid Yuriev)

In reply to this message

1.

Yes, it is possible (delete a range without reading most of a leaf pages).

Please file an issue on the github.

2.

Essentially MDBX stores multi-values (aka duplicates) in a nested b+tree, i.e. single key + subtree of values.

So the`mdbx_cursor_del(MDBX_ALLDUPS)` just drops such nested b+tree.

3.

While dropping a whole tree of pages we can avoid read the leaf pages, since only page numbers is needed but not the data.

This is true for canonical b-tree, where leaf pages just holds data but not refs to an any other pages.

But this is false in case when b+tree includes an overflow/large page(s), i.e. at least one leaf page has a reference to an overflow/large page(s).

Yes, it is possible (delete a range without reading most of a leaf pages).

Please file an issue on the github.

2.

Essentially MDBX stores multi-values (aka duplicates) in a nested b+tree, i.e. single key + subtree of values.

So the`mdbx_cursor_del(MDBX_ALLDUPS)` just drops such nested b+tree.

3.

While dropping a whole tree of pages we can avoid read the leaf pages, since only page numbers is needed but not the data.

This is true for canonical b-tree, where leaf pages just holds data but not refs to an any other pages.

But this is false in case when b+tree includes an overflow/large page(s), i.e. at least one leaf page has a reference to an overflow/large page(s).

AS

16:55

Alex Sharov

It means - if place “big range” in 1 dupsort sub-tree - then already can drop sub-tree (instead of creating new api), and technically it’s 1 leaf page touch (no overflow pages here). If yes, then I will not file issue for now - will discuss concept with our team first.

Л(

17:28

Леонид Юрьев (Leonid Yuriev)

In reply to this message

Yes, if you need delete a whole

dupsort sub-tree for the key - this is done by current API.

AS

17:30

Alex Sharov

In reply to this message

And it’s equal to 1 leaf page touch (no overflow), right?

Л(

17:33

Леонид Юрьев (Leonid Yuriev)

No.

This required CoW'ing a stroke of pages from the root to the leaf of a main tree.

This required CoW'ing a stroke of pages from the root to the leaf of a main tree.

AS

17:33

Alex Sharov

Yes, branch pages are ok

14 February 2021

Денис Гура invited Денис Гура

15 February 2021

EdgarArout invited EdgarArout

Л(

19:19

Леонид Юрьев (Leonid Yuriev)

Завтра планирую быть на http://www.mcst.ru/elbrus-tech-day-1617-fevralya-2021-goda

16 February 2021

NK

14:22

Noel Kuntze

Hi, I need some help writing the type caster for the Python bindings. I'd appreciate any help! I did a short summary of what I need help with in issue #147 (https://github.com/erthink/libmdbx/issues/147).

Л(

14:34

Леонид Юрьев (Leonid Yuriev)

In reply to this message

Thank you, I will definitely look and answer you later.

17 February 2021

NK

21:29

Noel Kuntze

Hey Leonid, did you take a look?

18 February 2021

Л(

12:11

Леонид Юрьев (Leonid Yuriev)

In reply to this message

I'm sorry for the delay, I'll answer just now.

NK

16:35

Noel Kuntze

Thank you for your comment on the threat

22 February 2021

b

19:08

basiliscos

@erthink , поскажите, а с помощью курсора можно сканирование по префиксу ключа произвести? Т.е. у меня префикс

abc_*, хочу выбрать все ключи со значениями, по этой бинарной маске?

Л(

20:06

Леонид Юрьев (Leonid Yuriev)

In reply to this message

Да, конечно, как в любой СУБД.

Установите курсор на первый подходящий ключ и итерируйте вперед пока не выйдите за пределы нужного префикса.

Установите курсор на первый подходящий ключ и итерируйте вперед пока не выйдите за пределы нужного префикса.

b

20:13

basiliscos

спасибо )

23 February 2021

Виталий invited Виталий

В

12:17

Виталий

Здравствуйте!

У меня данные гарантированно идут в алфавитном порядке. В leveldb они таким же образом и записаны на диск, что повышает скорость чтения. Предоставляет ли libmdbx такую возможность?

У меня данные гарантированно идут в алфавитном порядке. В leveldb они таким же образом и записаны на диск, что повышает скорость чтения. Предоставляет ли libmdbx такую возможность?

Л(

17:32

Леонид Юрьев (Leonid Yuriev)

In reply to this message

Если просто вставлять данные в пустую БД в порядке сортировки, то на диске страницы с данными будут преимущественно в последовательном порядке.

Но при этом заполнение страниц будет 50% (при полном заполнении крайняя листовая страницы будет разделаться на две, и так далее).

Для полного заполнения страниц при последовательной вставке следует использовать опции

Кроме этого "выпрямить" порядок страниц можно сделав копию БД с компактификаций (утилитой

Но при этом заполнение страниц будет 50% (при полном заполнении крайняя листовая страницы будет разделаться на две, и так далее).

Для полного заполнения страниц при последовательной вставке следует использовать опции

MDBX_APPEND и MDBX_APPENDDUP.Кроме этого "выпрямить" порядок страниц можно сделав копию БД с компактификаций (утилитой

mdbx_copy -c или mdbx_env_copy()), а также сделав дамп-восстановление утилитами mdbx_dump + mdbx_load).

В

25 February 2021

AS

05:02

Alex Sharov

Подскажите, Чего мне подебажить чтобы понять причину: https://github.com/erthink/libmdbx/issues/164

05:14

Еще мы видим что mdbx на наших данных почему-то на 13% лучше сжимается при запуске на “zfs with enabled compression”, но не знаем почему :-)

Л(

11:14

Сейчас я занят и не могу отвлекаться/переключаться.

AS

11:24

Alex Sharov

sure

Л(

23:27

Леонид Юрьев (Leonid Yuriev)

In reply to this message

В целом всё выглядит довольно странно.

Пока у меня три "гипотезы":

- в libmdbx есть какой-то недочет, который проявляется в вашем сценарии.

- некая особенность libmdbx провоцирует/усугубляет некий недочет в ядре ОС, либо в драйвере диска или (даже) в его внутренней прошивке.

- наибольшее подозрение сейчас вызывает madvise.

С другой стороны, лучшее сжатие и одновременно худшая производительность могут быть объяснены увеличением (не важно по какой причине) реального размера БД.

Но, наверное, вы бы это заметили?

———

С учетом того, что тестовые прогоны достаточно дороги (как минимум по времени), я бы попросил вас сделать разумный минимум:

0.

Не стоит использовать

Это скорее внутренняя около-отладочная опция для проверки влияния авто-компактификации на производительность и другие показатели.

1. Если сохранились базы после прогонов с LMDB и MDBX, то предоставить вывод

Если же базы НЕ созранились, до сделать это после очередного прогона.

Причем желательно сохранить базу MDBX (на момент явного отставания), чтобы после (при необходимости) посмотреть её более детально.

2. В дальнейшем запускать тесты через утилиту

Так мы получим от системы базовую информацию по потраченным ресурсам, что (предположительно) позволит понять что происходит или сузить гипотезы.

3. В MDBX отключить

Т.е. есть подозрение что madvise-подсказки выдаются либо неверно/неоптимально, либо проявляют недочет в ядре.

———

Дополнительно стоит собирать и добавить на график(и) метрики от

Видимо лучше будет сделать два graphana-like графика по-отдельности для LMDB и MDBX.

Тогда можно будет увидеть корреляцию деградацию с изменением каких-то параметров.

Но пока этого делать не стоит, точнее не стоит если вам что-то подобное не нужно самими.

———

Кроме вышесказанного, я постараюсь прогнать тесты для сценария БД > ОЗУ.

Пока у меня три "гипотезы":

- в libmdbx есть какой-то недочет, который проявляется в вашем сценарии.

- некая особенность libmdbx провоцирует/усугубляет некий недочет в ядре ОС, либо в драйвере диска или (даже) в его внутренней прошивке.

- наибольшее подозрение сейчас вызывает madvise.

С другой стороны, лучшее сжатие и одновременно худшая производительность могут быть объяснены увеличением (не важно по какой причине) реального размера БД.

Но, наверное, вы бы это заметили?

———

С учетом того, что тестовые прогоны достаточно дороги (как минимум по времени), я бы попросил вас сделать разумный минимум:

0.

Не стоит использовать

MDBX_ENABLE_REFUND=0, по крайней мере в production.Это скорее внутренняя около-отладочная опция для проверки влияния авто-компактификации на производительность и другие показатели.

1. Если сохранились базы после прогонов с LMDB и MDBX, то предоставить вывод

mdb_stat -aef и mdbx_stat -aef.Если же базы НЕ созранились, до сделать это после очередного прогона.

Причем желательно сохранить базу MDBX (на момент явного отставания), чтобы после (при необходимости) посмотреть её более детально.

2. В дальнейшем запускать тесты через утилиту

/usr/bin/time -v (лучше не путать с time встроенной в bash).Так мы получим от системы базовую информацию по потраченным ресурсам, что (предположительно) позволит понять что происходит или сузить гипотезы.

3. В MDBX отключить

madvise(), сейчас я добавлю соответствующую опцию сборки.Т.е. есть подозрение что madvise-подсказки выдаются либо неверно/неоптимально, либо проявляют недочет в ядре.

———

Дополнительно стоит собирать и добавить на график(и) метрики от

getrusage() (за вычетом не поддерживаемых на Linux, см. man getrusage), а также основные параметры "геометрии БД": backed, allocated, used, gc (mdbx_stat -ef) и соответствующие им для LMDB (mdb_stat -ef, но там меньше информации и я не помню подробностей).Видимо лучше будет сделать два graphana-like графика по-отдельности для LMDB и MDBX.

Тогда можно будет увидеть корреляцию деградацию с изменением каких-то параметров.

Но пока этого делать не стоит, точнее не стоит если вам что-то подобное не нужно самими.

———

Кроме вышесказанного, я постараюсь прогнать тесты для сценария БД > ОЗУ.

26 February 2021

𝓜𝓲𝓬𝓱𝓪𝓮𝓵 invited 𝓜𝓲𝓬𝓱𝓪𝓮𝓵

?

02:24

𝓜𝓲𝓬𝓱𝓪𝓮𝓵

Здравствуйте! Я правильно понимаю, что бд не поддерживает шифрование из коробки?

Л(

02:30

Леонид Юрьев (Leonid Yuriev)

In reply to this message

Да, правильно.

В libmdbx сквозного/прозрачного шифрования нет и не будет, так как это требует:

- изменение формата БД, т.е. нарушение совместимости.

- поддержку внутреннего кэша расшифрованных страниц, что нарушает исходно заложенный дизайн и предполагает другие сценарии использования.

Такое шифрование планируется в MithrilDB и LMDB 1.0 (уже сейчас есть в devel-прототипе в ветке master3).

Если вам требуется шифрование с libmdbx, то потребуется делать "руками" перед помещением данных в БД и после чтения.

В libmdbx сквозного/прозрачного шифрования нет и не будет, так как это требует:

- изменение формата БД, т.е. нарушение совместимости.

- поддержку внутреннего кэша расшифрованных страниц, что нарушает исходно заложенный дизайн и предполагает другие сценарии использования.

Такое шифрование планируется в MithrilDB и LMDB 1.0 (уже сейчас есть в devel-прототипе в ветке master3).

Если вам требуется шифрование с libmdbx, то потребуется делать "руками" перед помещением данных в БД и после чтения.

?

02:30

𝓜𝓲𝓬𝓱𝓪𝓮𝓵

Хорошо, спасибо

AS

05:43

Alex Sharov

In reply to this message

Спасибо. Сделаю. 0. Я просто проверял что существующие ручки не помогают изменить картину (в проде использовать будем refund и coalesce). 5. Графана у нас есть, но я хочу туда протащить pagecachemiss из cachestat (https://github.com/iovisor/bcc). 6. Без внешнего сжатия база получается на 5% больше, со сжатием на 13% меньше. Причиной того что со сжатием работает быстрее может быть тот факт что zfs дефолтный размер блока сжатия - 128Кb.

Л(

13:57

Леонид Юрьев (Leonid Yuriev)

In reply to this message

Просьба по-возможности отписывать в issue на github.

Тогда эта информация индексируется и становится доступной остальным.

Тогда эта информация индексируется и становится доступной остальным.

Л(

15:52

Леонид Юрьев (Leonid Yuriev)

In reply to this message

1.

Из этого следует, что сначала нужно понять откуда +5%.

Предположительно дело в том, что в libmdbx достаточно строго выполняется стратегия слияния страниц = если страница заполнена менее чем на 25%, то она сливается с наименее заполненным соседом.

В среднем это обеспечивает более-менее рациональный баланс между рыхлостью и скоростью вставок:

- если страницы заполнены слишком сильно, то при вставках будет часто производиться разделение страниц (со вставкой ключей-ссылок в родительские страницы и т.д.);

- если страницы заполнены слишком рыхло, то страниц просто много, а БД просто больше по размеру;

- т.е. компромисс между потерями на вводе-выводе и затратами на разделение страниц.

Золотого сечения тут нет, но в среднем для больших БД выгоднее заполнять страницы плотнее, и наоборот для маленьких БД.

Можно попробовать подкрутить этот параметр, так чтобы для больших БД уже чувствовалась разница, а для маленьких деградация была еще не заметна.

Сейчас я сделаю соответствующие правки, чтобы вы могли попробовать.

А в дальнейшем - вынести в runtime опции, если это даст положительный эффект.

2.

Лучшее сжатие частично можно объяснить рыхлостью страниц, чуть более регулярной структурой и обнуленными хвостами large/overflow страниц.

Тем не менее, для меня тут нет полной ясности.

В том числе, на 13% лучше - это относительно сжатого размера LMDB, или разница в сжатии?

3.

Если на zfs со сжатием работает быстрее, то из-за суммы двух причин:

- сжатие уменьшает I/O bandwidth с диском.

- при чтении блоки по 128К вынуждают page cache работать не с отдельными 4К страниц, а как-бы блоками по 32 страницы.

т.е. это некий micro read ahead, который увеличивает I/O bandwidth, но немного уменьшает IOPS.

- при записи блоки по 128К вынуждают коагулировать все write requests в пределах блока в одну IO-операцию.

т.е. это некий write micro batching, который увеличивает I/O bandwidth, но немного уменьшает IOPS.

Итоговый эффект принципиально зависит от того как много I/O операций удается схлопнуть в одну (от 1 до 32) и от суммарных накладных расходов на одну I/O операцию (как в ядре, так и у диске, включая seek / pagezone switch).

Тем не менее, если вы видите выгоду, то вероятно есть смысл попробовать более крупный размер страниц в БД (8/16/32/64K).

Из этого следует, что сначала нужно понять откуда +5%.

Предположительно дело в том, что в libmdbx достаточно строго выполняется стратегия слияния страниц = если страница заполнена менее чем на 25%, то она сливается с наименее заполненным соседом.

В среднем это обеспечивает более-менее рациональный баланс между рыхлостью и скоростью вставок:

- если страницы заполнены слишком сильно, то при вставках будет часто производиться разделение страниц (со вставкой ключей-ссылок в родительские страницы и т.д.);

- если страницы заполнены слишком рыхло, то страниц просто много, а БД просто больше по размеру;

- т.е. компромисс между потерями на вводе-выводе и затратами на разделение страниц.

Золотого сечения тут нет, но в среднем для больших БД выгоднее заполнять страницы плотнее, и наоборот для маленьких БД.

Можно попробовать подкрутить этот параметр, так чтобы для больших БД уже чувствовалась разница, а для маленьких деградация была еще не заметна.

Сейчас я сделаю соответствующие правки, чтобы вы могли попробовать.

А в дальнейшем - вынести в runtime опции, если это даст положительный эффект.

2.

Лучшее сжатие частично можно объяснить рыхлостью страниц, чуть более регулярной структурой и обнуленными хвостами large/overflow страниц.

Тем не менее, для меня тут нет полной ясности.

В том числе, на 13% лучше - это относительно сжатого размера LMDB, или разница в сжатии?

3.

Если на zfs со сжатием работает быстрее, то из-за суммы двух причин:

- сжатие уменьшает I/O bandwidth с диском.

- при чтении блоки по 128К вынуждают page cache работать не с отдельными 4К страниц, а как-бы блоками по 32 страницы.

т.е. это некий micro read ahead, который увеличивает I/O bandwidth, но немного уменьшает IOPS.

- при записи блоки по 128К вынуждают коагулировать все write requests в пределах блока в одну IO-операцию.

т.е. это некий write micro batching, который увеличивает I/O bandwidth, но немного уменьшает IOPS.

Итоговый эффект принципиально зависит от того как много I/O операций удается схлопнуть в одну (от 1 до 32) и от суммарных накладных расходов на одну I/O операцию (как в ядре, так и у диске, включая seek / pagezone switch).

Тем не менее, если вы видите выгоду, то вероятно есть смысл попробовать более крупный размер страниц в БД (8/16/32/64K).

AV

16:44

Artem Vorotnikov

@erthink флаги для sync-режимов, liforeclaim, coalesce и writemap не учитываются при

MDBX_RDONLY?

Л(

17:17

Леонид Юрьев (Leonid Yuriev)

In reply to this message

Их негде/некогда/незачем учитывать если процесс открывает БД только для чтения.

28 February 2021

Л(

15:38

Леонид Юрьев (Leonid Yuriev)

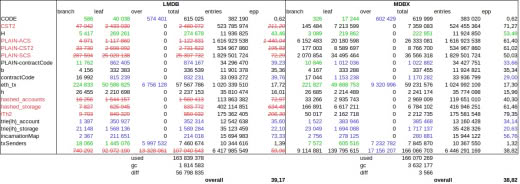

AS

15:43

h,H,b,txSenders - можно убрать. Они изначально были неравными немного и в эксперименте не апдейтились.

Л(

AS

15:46

Alex Sharov

Смотреть нужно на: PLAIN-ACS, PLAIN-SCS, PLAIN-CST2

они в основном обновлялись.

PLAIN-ACS, PLAIN-SCS - через APPEND

PLAIN-CST2 - через UPSERT

они в основном обновлялись.

PLAIN-ACS, PLAIN-SCS - через APPEND

PLAIN-CST2 - через UPSERT

Л(

15:46

Леонид Юрьев (Leonid Yuriev)

Легенда:

- краным/зачеркнутым = там где статистики LMDB нельзя верить из-за ошибок.

- синее = в LMDB менее рыхлые страницы.

- зеленое = в MDBX менее рыхлые страницы.

- колона epp = Entries Per Page

- overall снизу = общее "по больнице" значение EPP.

- краным/зачеркнутым = там где статистики LMDB нельзя верить из-за ошибок.

- синее = в LMDB менее рыхлые страницы.

- зеленое = в MDBX менее рыхлые страницы.

- колона epp = Entries Per Page

- overall снизу = общее "по больнице" значение EPP.

AS

15:47

Alex Sharov

блин 🙂 и они все DUPSORT поэтому lmdb показывает не правду про них

Л(

15:48

Леонид Юрьев (Leonid Yuriev)

In reply to this message

Да, то только когда дубликаты реально есть и выносятся в отдельные nested b-tree

15:50

Важнее overall снизу - там посчитано по используемым страницам, при этом в diff видно "неучтенку":

- для LMDB видим 56M страниц из-за багов;

- для MDBX видим 3.5K потому что здесь не учтены расходы на GC и мелкие таблицы.

- для LMDB видим 56M страниц из-за багов;

- для MDBX видим 3.5K потому что здесь не учтены расходы на GC и мелкие таблицы.

AS

15:54

Alex Sharov

прикольно. я такого никогда не считал.

Л(

17:17

Леонид Юрьев (Leonid Yuriev)

В "сухом остатке" на текущий момент:

1.

Базы разные, в том числе с разной историей и разным кол-ом таблиц.

Заполнение страниц зависит от всех вставок/удалений в истории и не меняется при mdbx_copy.

Поэтому чтобы отвязаться от истории нужно mdbx_dump + mdbx_load, при этом подумать нужен ли append-режим для load (ключик

2.

"В среднем по больнице" заполнение страниц у MDBX и LMDB примерно одинаковое.

При этом у MDBX дерево более выгодное (меньше branch-станиц, больше leaf/overflow = уже сверку, шире снизу).

Но у MDBX намного больше overflow-страниц (на ~15Gb), поэтому суммарно размер больше, т.е. "хотели как лучше, а получилось как всегда" ;)

Это лучше всего видно на таблице

3.

Если отбросить заведомо недостоверную статистику LMDB, но просуммировать разницу по overflow страница и страница в GC/Freelist, то получится (17156207-13328061 + 3632177-1814583)*4/1024/1024 = 22.054 Gb.

Примерно тоже самое если посмотреть на "Number of pages used: 163839378" в LMDB и "Allocated: 169702446" в MDBX, то разница (169702446-163839378)*4/1024/1024 = 22.365 Gb.

Отсюда можно сделать выводы:

- в MDBX получилось на (17156207-13328061)*4/1024/1024 = 14.954 Gb больше overflow страниц.

- скорее всего, часть из этих страниц обновлялась в последнюю транзакции и поэтому предыдущая версия осела в GC: (3632177-1814583)*4/1024/1024 = 7,100 Gb.

- если основная часть эти overflow-данных горячая, то это объясняет нажор RSS.

—-

Итого:

- Похоже что причина увеличения БД и увеличения RSS в большем кол-ве overflow страниц, из которых большинство горячие.

- Я посмотрю почему в MDBX получается больше overflow-страниц, но уже не сегодня.

- При таком кол-ве overflow-страниц, т.е. длинных значений, вам стоит попробовать большие размеры страницы. Причем начать с 64K и двигаться вниз.

P.S.

Размер страницы можно задать вызвав mdbx_env_set_geometry() перед open для еще не существующей БД.

1.

Базы разные, в том числе с разной историей и разным кол-ом таблиц.

Заполнение страниц зависит от всех вставок/удалений в истории и не меняется при mdbx_copy.

Поэтому чтобы отвязаться от истории нужно mdbx_dump + mdbx_load, при этом подумать нужен ли append-режим для load (ключик

-a).2.

"В среднем по больнице" заполнение страниц у MDBX и LMDB примерно одинаковое.

При этом у MDBX дерево более выгодное (меньше branch-станиц, больше leaf/overflow = уже сверку, шире снизу).

Но у MDBX намного больше overflow-страниц (на ~15Gb), поэтому суммарно размер больше, т.е. "хотели как лучше, а получилось как всегда" ;)

Это лучше всего видно на таблице

txSenders.3.

Если отбросить заведомо недостоверную статистику LMDB, но просуммировать разницу по overflow страница и страница в GC/Freelist, то получится (17156207-13328061 + 3632177-1814583)*4/1024/1024 = 22.054 Gb.

Примерно тоже самое если посмотреть на "Number of pages used: 163839378" в LMDB и "Allocated: 169702446" в MDBX, то разница (169702446-163839378)*4/1024/1024 = 22.365 Gb.

Отсюда можно сделать выводы:

- в MDBX получилось на (17156207-13328061)*4/1024/1024 = 14.954 Gb больше overflow страниц.

- скорее всего, часть из этих страниц обновлялась в последнюю транзакции и поэтому предыдущая версия осела в GC: (3632177-1814583)*4/1024/1024 = 7,100 Gb.

- если основная часть эти overflow-данных горячая, то это объясняет нажор RSS.

—-

Итого:

- Похоже что причина увеличения БД и увеличения RSS в большем кол-ве overflow страниц, из которых большинство горячие.

- Я посмотрю почему в MDBX получается больше overflow-страниц, но уже не сегодня.

- При таком кол-ве overflow-страниц, т.е. длинных значений, вам стоит попробовать большие размеры страницы. Причем начать с 64K и двигаться вниз.

P.S.

Размер страницы можно задать вызвав mdbx_env_set_geometry() перед open для еще не существующей БД.

AS

17:24

Alex Sharov

1. более аккуратные данные будут через несколько дней

3. "часть из этих страниц обновлялась в последнюю транзакции" - мне кажется что это не так, потому что работа которую делал процесс после mdb_copy -c писала в DUPSORT таблицы.

4. Эксперимент с бОльшими страницами запущу - когда получу результаты по

3. "часть из этих страниц обновлялась в последнюю транзакции" - мне кажется что это не так, потому что работа которую делал процесс после mdb_copy -c писала в DUPSORT таблицы.

4. Эксперимент с бОльшими страницами запущу - когда получу результаты по

MADVISE

AS

18:15

Alex Sharov

Там наши люди просят перейти на английский. Хотят читать этот чат.

Л(

Л(

20:53

Леонид Юрьев (Leonid Yuriev)

In reply to this message

I duplicated my last answers in (pidgin) English into the issue at github.

https://github.com/erthink/libmdbx/issues/164

https://github.com/erthink/libmdbx/issues/164

f

21:43

fuunyK

thank you!

1 March 2021

AV

18:44

Artem Vorotnikov

is it OK to open (and use) multiple write cursors within the same write transaction?

Л(

18:45

Леонид Юрьев (Leonid Yuriev)

In reply to this message

Yes, of cause.

Including multiple cursors for a particular key-value map.

Including multiple cursors for a particular key-value map.

AV

18:47

Artem Vorotnikov

also all methods are OK to be called from multiple threads, provided that NOTLS/CHECKOWNERS=0 and write tx constructor/destructor is run from the same thread?

Л(

18:50

Леонид Юрьев (Leonid Yuriev)

In reply to this message

Yes, but you MUST NEVER use write txn and/or write cursor(s) from multiple threads simultaneously !

Including close/open cursor(s) !

Including close/open cursor(s) !

AV

18:51

Artem Vorotnikov

In reply to this message

ah, so concurrent, behind mutex, from multiple threads is OK, but parallel is not?

Л(

18:55

Леонид Юрьев (Leonid Yuriev)

In reply to this message

The "concurrent" may be confusing here.

You may use _cooperative_ multi-threading, but no more, i.e. sure not "parallel".

+Oops, I was wrong in terms, correctly say "cooperative multithreading".

You may use _cooperative_ multi-threading, but no more, i.e. sure not "parallel".

+Oops, I was wrong in terms, correctly say "cooperative multithreading".

AV

18:56

Artem Vorotnikov

yeah, I phrased poorly, sorry

basically, it's OK to share write transaction and cursor across threads, as long as I put it behind a mutex

basically, it's OK to share write transaction and cursor across threads, as long as I put it behind a mutex

Л(

AV

18:59

Artem Vorotnikov

a bit of context: I tried using existing Rust LMDB/MDBX binding (heed), but being a dual binding makes it a jack of all trades. Besides, I got negative feedback from some of its users (segfaults), and it also obscures real libmdbx API, and provides its own, which is rather different

19:00

so I returned to porting Mozilla's LMDB bindings, but am also trying to tailor the API specifically to libmdbx guarantees / usage conditions, so that much would be checked by compiler

Л(

19:00

Леонид Юрьев (Leonid Yuriev)

In reply to this message

AFAIK: heed is designed (and tested) for Mellisearch only.

19:05

In reply to this message

👍

In this case, I would like to repeat: Please take a look at the C++ API; I think it is better to repeat modern C++ API in Rust rather than legacy C API, with making changes if necessary (including in the C++sources).

In this case, I would like to repeat: Please take a look at the C++ API; I think it is better to repeat modern C++ API in Rust rather than legacy C API, with making changes if necessary (including in the C++sources).

AV

19:33

Artem Vorotnikov

do I have to manually close databases before closing the environment?

Л(

19:44

Леонид Юрьев (Leonid Yuriev)

In reply to this message

No, if you mean the closing of a DBI-handles.

2 March 2021

AS

04:44

Alex Sharov

Is it possible to achieve reproducible (to match checksum) db files - when loading 2 collections to new db by Append. For example if i will load everything within 1 transaction and then run mdbx_copy -c.

AS

05:07

Alex Sharov

Another theoretical question: is it possible to get any benefits from “forward-only version of GET_RANGE”?

Л(

07:25

In reply to this message

The forward-direction iteration may be little bit faster than reverse.

AS

08:03

Alex Sharov

FYI: we changed our data model - and don't use custom comparators anymore.

Л(

AV

15:43

Artem Vorotnikov

cursors become invalid and cannot be used anymore after dbi is dropped?

Л(

15:46

Леонид Юрьев (Leonid Yuriev)

In reply to this message

In general, yes.

But take look at the

But take look at the

mdbx_cursor_bind().

AV

15:57

Artem Vorotnikov

In reply to this message

bytes written into provided

iovec through mdbx_get also should not be used after dbi drop?

Л(

16:16

Леонид Юрьев (Leonid Yuriev)

In reply to this message

This is generally a more complex question for write transactions case.

- it is the major difference is where the data is located, in a "dirty" or "clean" page.

- a data in the "clean" pages will be valid, since these pages are inside an ancestor (i.e. frozen/committed) MVCC snapshot, which will always be intact within the current transaction.

- a data in a "dirty" pages will become invalid, because after DBI dropping these pages (shadow copies) can be released or reused.

Therefore, in writing transactions, you should carefully work with pointers to data inside the database.

If the data is in a dirty page, it may become invalid in any subsequent operation that changes the contents of the database.

See

- it is the major difference is where the data is located, in a "dirty" or "clean" page.

- a data in the "clean" pages will be valid, since these pages are inside an ancestor (i.e. frozen/committed) MVCC snapshot, which will always be intact within the current transaction.

- a data in a "dirty" pages will become invalid, because after DBI dropping these pages (shadow copies) can be released or reused.

Therefore, in writing transactions, you should carefully work with pointers to data inside the database.

If the data is in a dirty page, it may become invalid in any subsequent operation that changes the contents of the database.

See

mdbx_is_dirty() = https://erthink.github.io/libmdbx/group__c__statinfo.html#ga32a2b4d341edf438c7d050fb911bfce9

16:19

r u got this?

NK

17:11

Noel Kuntze

Hey, I got some questions regarding the C++ API:

1) What's the generally necessary code to open a new env and open an existing map? I didn't find any such method that allows me to make a new env and then open a map relative to it in the C++ API, just in the C API.

2) Why did you keep the implementation details in mdbx.h++ instead of moving them into a seperate header file? That way one needs to look through far fewer lines.

1) What's the generally necessary code to open a new env and open an existing map? I didn't find any such method that allows me to make a new env and then open a map relative to it in the C++ API, just in the C API.

2) Why did you keep the implementation details in mdbx.h++ instead of moving them into a seperate header file? That way one needs to look through far fewer lines.

17:13

Also, are there any projects or public code examples using the C++ API?

AV

17:46

Artem Vorotnikov

In reply to this message

I'm not sure I can cover this cleanly in API contract - I guess I will make the bytes' lifetime dependent on dbi

17:48

also, if write transaction drops dbi, existing read transactions can still work with dbi safely until commit/abort?

AV

18:29

Artem Vorotnikov

In reply to this message

what if I write into an iovec with

mdbx_get, then run mdbx_del for the same key? will an iovec remain valid?

Л(

18:49

Леонид Юрьев (Leonid Yuriev)

In reply to this message

1. https://erthink.github.io/libmdbx/classmdbx_1_1env__managed.html#a1f9cddca614bcccd9033e9f056fc5083

2. I prefer to supply a single header file and an online API description.

2. I prefer to supply a single header file and an online API description.

NK

18:50

Noel Kuntze

I see, ty

Л(

Л(

19:05

Леонид Юрьев (Leonid Yuriev)

In reply to this message

This is the cost of zero-copy.

i.e. we should either always copy data during read (abandon zero-copy like most of storage engines) or check for dirty and make copy conditionally.

i.e. we should either always copy data during read (abandon zero-copy like most of storage engines) or check for dirty and make copy conditionally.

AV

19:11

Artem Vorotnikov

so, API will look roughly like this:

lines represent lifetime dependency

Env

^

|

Txn

^

|

Database <-------\

^ |

| |

Cursor Values (&[u8])

lines represent lifetime dependency

19:12

what this means is that e.g. Txn instance must not outlive Env (commit/abort before closing env)

19:13

the major difference is that Dbi is now more than just a handle, but an entity of its own, to which cursor and values are bound, not to txn

19:14

cannot drop dbi if value slices are still alive, as that would make those slices dangling pointers

AA

19:25

Alexey Akhunov

you meant "Txn must NOT outlive Env" perhaps

AV

Л(

19:27

Леонид Юрьев (Leonid Yuriev)

In reply to this message

Please read my explanations again.

Для ясности я поясню по-русски:

- БД состоит из страниц, в которых размещаются ключи и значения;

- изменения всегда вносятся в копии страницы (CoW, page shadowing);

- не записанные на диск (временные, в ОЗУ) страницы называются "грязными";

- "грязные" страницы обновляются в ОЗУ, а "чистые" на диске не меняются;

- при чтении в iovec возвращается указатель на данные, которые транзакции чтения-записи могут быть как в "чистой" странице, так и в "грязной";

- любая "грязная" страница может быть обновлена или освобождена при последующих изменениях в ходе транзакции;

- указатель в iovec при этом не изменится, но будет указывать на мусор.

Примеры борьбы с этим:

1. см

2. см использование

Для ясности я поясню по-русски:

- БД состоит из страниц, в которых размещаются ключи и значения;

- изменения всегда вносятся в копии страницы (CoW, page shadowing);

- не записанные на диск (временные, в ОЗУ) страницы называются "грязными";

- "грязные" страницы обновляются в ОЗУ, а "чистые" на диске не меняются;

- при чтении в iovec возвращается указатель на данные, которые транзакции чтения-записи могут быть как в "чистой" странице, так и в "грязной";

- любая "грязная" страница может быть обновлена или освобождена при последующих изменениях в ходе транзакции;

- указатель в iovec при этом не изменится, но будет указывать на мусор.

Примеры борьбы с этим:

1. см

struct data_preserver внутри template<> class buffer в https://github.com/erthink/libmdbx/blob/master/mdbx.h%2B%2B#L828 2. см использование

mdbx_is_dirty() внутри libfpta, например https://github.com/erthink/libfpta/blob/master/src/data.cxx#L690-L694

Л(

19:51

Леонид Юрьев (Leonid Yuriev)

На всякий для информации о возможной дополнительной поддержки Rust:

В MithrilDB будет генерализированное безопасное mostly zero-copy чтение, упрощенно:

- любое чтение данных из БД будет требовать предоставление указателя на iovec и callback для копирования данных при необходимости;

- если возвращаемые в iovec данные расположены в грязной странице, то при её модификации будет вызван callback для копирования данных или учета их перемещения;

Технически этот механизм можно бэк-портировать в MDBX, ибо при этом не затрагивается (уже зафиксированный) формат БД.

Но я бы предпочел не тратить на это время без крайней необходимости.

В MithrilDB будет генерализированное безопасное mostly zero-copy чтение, упрощенно:

- любое чтение данных из БД будет требовать предоставление указателя на iovec и callback для копирования данных при необходимости;

- если возвращаемые в iovec данные расположены в грязной странице, то при её модификации будет вызван callback для копирования данных или учета их перемещения;

Технически этот механизм можно бэк-портировать в MDBX, ибо при этом не затрагивается (уже зафиксированный) формат БД.

Но я бы предпочел не тратить на это время без крайней необходимости.

AV

19:58

Artem Vorotnikov

пока попробую извратить API и при этом избежать копирования

19:59

если что, Rust здесь никак не мешает, просто я хочу "казусы" использования перенести из документации в API благо Rust как раз на это заточен

20:00

грубо говоря, весь код на С/С++ - как unsafe Rust, можно получить сегфолт

я же хочу с помощью языковых инструментов языка сделать "неубиваемый" биндинг

я же хочу с помощью языковых инструментов языка сделать "неубиваемый" биндинг

20:00

чтобы без блока unsafe нельзя было получить падение

20:02

чтобы все ошибки при использовании ловились компилятором на уровне очень крутой системы типов

Л(

20:02

Леонид Юрьев (Leonid Yuriev)

In reply to this message

Гипотетически (наверное) можно как-то описать, что прочитанные данные "живут" до следующей модифицирующей операции или до конца транзакции.

Но как это сделать технически в Rust я (откровенно говоря) не знаю.

Поэтому будет любопытно посмотреть на реализацию.

Но как это сделать технически в Rust я (откровенно говоря) не знаю.

Поэтому будет любопытно посмотреть на реализацию.

AV

20:05

Artem Vorotnikov

impl Database {

...

pub fn get<K>(&self, key: &K) -> Result<&[u8]>

where

K: AsRef<[u8]>

...

pub fn del<K>(&mut self, key: &K, data: Option<&[u8]>) -> Result<()>

where

K: AsRef<[u8]>

...

}

...

pub fn get<K>(&self, key: &K) -> Result<&[u8]>

where

K: AsRef<[u8]>

...

pub fn del<K>(&mut self, key: &K, data: Option<&[u8]>) -> Result<()>

where

K: AsRef<[u8]>

...

}

Л(

20:05

Леонид Юрьев (Leonid Yuriev)

На всякий - 50/50 что MithrilDB будет переписан (если так можно сказать про текущие не-публичные PoC-и) на Rust.

Во многом этом зависит от успехов МЦСТ с запуском Rust на Эльбрусах, ну и в целом от явно заявленных потребностей/требований.

Во многом этом зависит от успехов МЦСТ с запуском Rust на Эльбрусах, ну и в целом от явно заявленных потребностей/требований.

AV

20:06

Artem Vorotnikov

In reply to this message

тут всё зависит от портирования LLVM - rustc всего лишь "морда" к нему

Л(

AV

20:06

Artem Vorotnikov

точнее, генератор HIR и MIR - LLVM используется как байтогенератор

20:08

In reply to this message

если по-русски, то

чтобы вызвать

Database::get отдаёт слайс байтов, который "захватывает" ссылку на экземпляр Databaseчтобы вызвать

Database::del нужно взять эксклюзивную ссылку на Database - это невозможно пока живы любые ссылки на этот же экземпляр

20:08

в принципе, +- стандартный API растовых коллекций

Л(

AV

20:19

Artem Vorotnikov

только вот вопрос: если у меня несколько dbi, то операция в одной dbi может инвалидировать данные в грязных страницах другой dbi?

20:25

от этого зависит могу ли я сделать

Database::del/put, который захватит только dbi, или мне нужно делать Transaction::del/put и блокировать всю write-транзакцию

Л(

20:33

Леонид Юрьев (Leonid Yuriev)

Нет, но при должном упорстве можно добиться подобного неподобающим использованием API:

1. добавить key-value запись в

2. создать именоманную subDB (открыть DBI > 1), что косвенно помещает данные в

1. добавить key-value запись в

@MAIN (pre-defined DBI == 1) и прочитать эту запись;2. создать именоманную subDB (открыть DBI > 1), что косвенно помещает данные в

@MAIN, что может вызвать изменение страницы с данными добавленными и прочитанными на первом шаге.

AV

20:37

Artem Vorotnikov

In reply to this message

а почему это неподобающее использование? ) т.е. что-то типа такого может поломаться?

let txn = env.begin_rw_txn();

let mut db = txn.open_db(None);

db.put("hello", "world");

let world = db.get("hello");

txn.open_db(Some("foo")).put("bar", "quux");

println!("world должно равняться {}", String::from_utf8(world).unwrap());

let txn = env.begin_rw_txn();

let mut db = txn.open_db(None);

db.put("hello", "world");

let world = db.get("hello");

txn.open_db(Some("foo")).put("bar", "quux");

println!("world должно равняться {}", String::from_utf8(world).unwrap());

Л(

20:42

Леонид Юрьев (Leonid Yuriev)

In reply to this message

Ну формально`MAIN_DBI` (==0) сейчас не определено в API, поэтому "подабающим" использованием - нельзя.

Но если же сделать как описано всевдокодом - то

Но если же сделать как описано всевдокодом - то

world может "поломаться".

AV

20:58

Artem Vorotnikov

In reply to this message

А, т.е. это не безымянная база (name=NULL в mdbx_dbi_open)?

20:59

Так эта функция вызывается в псевдокоде, в случае foo

Л(

20:59

Леонид Юрьев (Leonid Yuriev)

In reply to this message

Наоборот, она родимая (я уже сам забыл что и так можно...)

AV

21:00

Artem Vorotnikov

Т.е. нельзя использовать безымянную базу при включённых именованных?

21:01

Иначе получается, что это правильное использование API )

Л(

21:03

Леонид Юрьев (Leonid Yuriev)

In reply to this message

Да, выходит что так )

От LMDB унаследовано много "кульбитов", как в API, так и в реализации...

От LMDB унаследовано много "кульбитов", как в API, так и в реализации...

AV

21:04

Artem Vorotnikov

В теории это можно выключить )

А если бы было два именованных dbi?

А если бы было два именованных dbi?

Л(

21:08

Леонид Юрьев (Leonid Yuriev)

In reply to this message

Для именованных DBI взаимовлияние ИСКЛЮЧЕНО:

- создаются вложенные b+tree, с собственной корневой страницей для каждого;

- до коммита транзакции обновления не "всплывают" дальше этих nested roots;

- обновление

- создаются вложенные b+tree, с собственной корневой страницей для каждого;

- до коммита транзакции обновления не "всплывают" дальше этих nested roots;

- обновление

@MAIN (установка актуальных ссылок на nested b+tree для subDB) происходит только при коммите транзакции.

AV

21:11

Artem Vorotnikov

In reply to this message

Понял, спасибо!

В биндингах безымянных таблиц не будет

В биндингах безымянных таблиц не будет

3 March 2021

AV

19:04

Artem Vorotnikov

In reply to this message

подумав ещё больше, я понял, что пользователь может дважды создать

скорее всего выкину zero-cost и &mut в Database - буду при каждом

Database (`mdbx_dbi_open`) и сделать get/put/del в произвольном порядкескорее всего выкину zero-cost и &mut в Database - буду при каждом

get проверять is_dirty, и если да - копировать данные

Л(

19:12

Леонид Юрьев (Leonid Yuriev)

In reply to this message

В MithrilDB функция API открытия DBI будет принимать дополнительный параметр - указатель на пользовательский объект, и возвращать ошибку при попытке открыть DBI с использованием разных указателей.

Т.е. внутри движка будет механизм позволяющий предотвратить создание более одного пользовательского binding-интерфейса к DBI-хендлу.

Т.е. внутри движка будет механизм позволяющий предотвратить создание более одного пользовательского binding-интерфейса к DBI-хендлу.

AV

Л(

AV

19:14

Artem Vorotnikov

ну пользователь открыл таблицу, БД вписала хендл в указатель

его надо тащить через всю программу, потому что если он потеряется, повторно открыть таблицу будет невозможно

его надо тащить через всю программу, потому что если он потеряется, повторно открыть таблицу будет невозможно

Л(

19:21

Леонид Юрьев (Leonid Yuriev)

In reply to this message

Не так:

- если передается ненулевой указатель, то есть экземпляр интерфейсного объекта, который "обёртывает" DBi-хендл в биндингах пользователя, и этот объект связывается с DBI-хендлом.

- внутри биндингов этот объект должен использоваться _вместо_ DBI-хендла, в том числе закрытие хендла должно выполняться посредством разрушения этого объекта.

- движок же просто предотвращает создание двух таких интерфейсных объектов.

- если передается ненулевой указатель, то есть экземпляр интерфейсного объекта, который "обёртывает" DBi-хендл в биндингах пользователя, и этот объект связывается с DBI-хендлом.

- внутри биндингов этот объект должен использоваться _вместо_ DBI-хендла, в том числе закрытие хендла должно выполняться посредством разрушения этого объекта.

- движок же просто предотвращает создание двух таких интерфейсных объектов.

AV

19:23

Artem Vorotnikov

а, ну то есть

let dbi1 = txn.open_db("test")?; // Ok

let dbi2 = txn.open_db("test")?; // Err(Error::Busy)

let dbi1 = txn.open_db("test")?; // Ok

let dbi2 = txn.open_db("test")?; // Err(Error::Busy)

Л(

AV

19:24

Artem Vorotnikov

let dbi1 = txn.open_db("test")?; // Ok

std::mem::drop(dbi1);

let dbi2 = txn.open_db("test")?; // Ok

std::mem::drop(dbi1);

let dbi2 = txn.open_db("test")?; // Ok

Л(

19:24

Леонид Юрьев (Leonid Yuriev)

Да, в том числе при

close вместо drop.

AV

19:25

Artem Vorotnikov

ну да, close забирает весь объект и внутри себя делает drop )

19:26

у меня была идея подумать над split borrows и чёрной магией дженериков

Л(

19:26

Леонид Юрьев (Leonid Yuriev)

In reply to this message

Хм, на всякий - не нужно путать drop (удаление kv-таблицы из БД) и close.

AV

19:29

Artem Vorotnikov

struct Txn {

pub db1: Database,

pub db2: Database,

}

....

Rust позволяет брать mut/const ссылки на разные поля в любой комбинации (при условии что по 1 mut/N const на одно поле) - это бы решило дилемму и позволило бы сохранить zero-copy

но я уже не стал в такие дебри уходить

pub db1: Database,

pub db2: Database,

}

....

Rust позволяет брать mut/const ссылки на разные поля в любой комбинации (при условии что по 1 mut/N const на одно поле) - это бы решило дилемму и позволило бы сохранить zero-copy

но я уже не стал в такие дебри уходить

4 March 2021

AV

17:00

Artem Vorotnikov

что будет если забыть заполнить массив

MDBX_RESERVE, в нём будут случайные данные?

Л(

17:03

Леонид Юрьев (Leonid Yuriev)

In reply to this message

Примерно да, останется то что было до выделения места.

Но могут быть и нули, если была выделена новая страница.

Но могут быть и нули, если была выделена новая страница.

AV

18:01

Artem Vorotnikov

лайфтайм у байтов в чистых страницах mdbx - dbi или вся транзакция? т.е. их можно читать после mdbx_dbi_drop?

Л(

18:04

Леонид Юрьев (Leonid Yuriev)

In reply to this message

Технически можно читать до конца транзакции, так как данные физически расположены в зафиксированном предыдущем MVCC-снимке.

Но логически - это WTF, ибо читаем уничтоженное.

Но логически - это WTF, ибо читаем уничтоженное.

AV

18:06

Artem Vorotnikov

ну я про сами байты, а не mdbx_get )

юзер может захотеть сделать drop, при это продолжить работу с уже прочитанными данными, не копируя их

юзер может захотеть сделать drop, при это продолжить работу с уже прочитанными данными, не копируя их

Л(

5 March 2021

AV

22:39

Artem Vorotnikov

mdbx_cursor_get вернул ENODATA, хотя в доке этой ошибки нет

6 March 2021

AS

08:50

Alex Sharov

If we have read-only db file (will never open for writes) - then it’s safe open 1 tx and never close it (until db close). Yes?

Л(

15:28

In reply to this message

This is reasonable, as long as there are no other processes running writing transactions.

Otherwise you will get https://erthink.github.io/libmdbx/intro.html#long-lived-read

Otherwise you will get https://erthink.github.io/libmdbx/intro.html#long-lived-read

AV

18:33

Artem Vorotnikov

я смог починить почти все ошибки в тестах, и осталась одна - после удаления dbi, снова могу её открыть как ни в чём ни бывало

18:45

точнее так, в рамках транзакции dbi реально удалена (MDBX_NOT_FOUND), но если закоммитить и открыть новую транзакцию - там dbi снова есть, данные на месте

18:52

при этом если в рамках той же транзакции ещё записать данные в другую таблицу, то dbi будет реально удалена

18:53